Ollama

Run Producer Pal completely offline with local models.

What You Need

- Ollama installed

- Ableton Live 12.3+ with Max for Live

1. Install Ollama

Download and install Ollama for your operating system.

2. Download a Model

Download a model that supports tools. Some good options include:

- qwen3

- devstral-small-2

- gpt-oss

Browse models with tool support on the Ollama website.

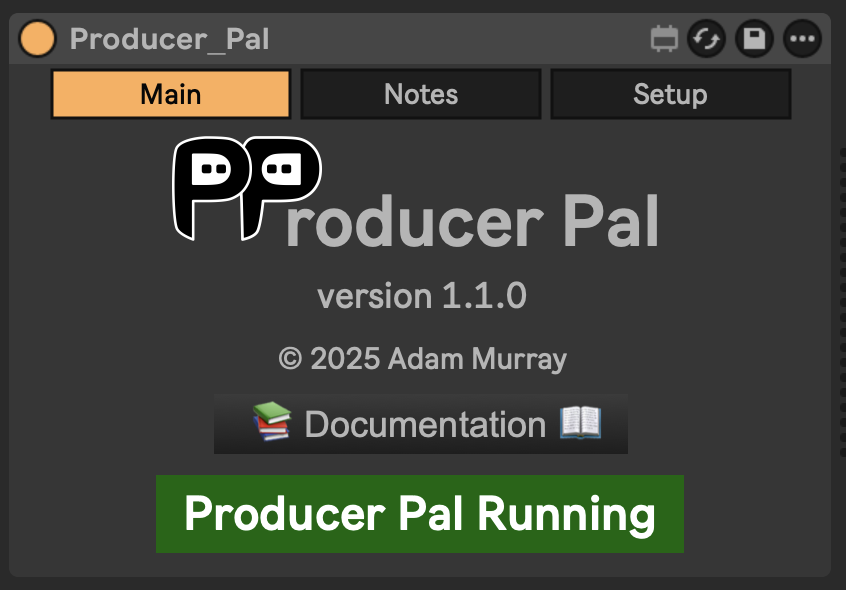
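Tool support can also be checked against the local Ollama server once a model is downloaded. The following is a minimal sketch, not part of Producer Pal. It assumes Ollama is listening on the default port 11434 and that the installed Ollama version reports a `capabilities` list in its `/api/show` response (recent versions do; older ones may omit it). The helper names `supports_tools` and `fetch_model_info` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def supports_tools(show_response: dict) -> bool:
    """Return True if an /api/show response lists the "tools" capability.

    A missing "capabilities" field is treated as "no tool support".
    """
    return "tools" in show_response.get("capabilities", [])


def fetch_model_info(model: str, base_url: str = OLLAMA_URL) -> dict:
    """POST the model name to Ollama's /api/show endpoint and parse the JSON reply."""
    body = json.dumps({"model": model}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/show",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

With Ollama running, `supports_tools(fetch_model_info("qwen3"))` should return `True` for a model from the tools category. If the `capabilities` field is absent, the function returns `False`, so a negative result on an older Ollama version is not conclusive.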

3. Install the Max for Live Device

Download Producer_Pal.amxd and drag it to a MIDI track in Ableton Live.

It should display "Producer Pal Running":

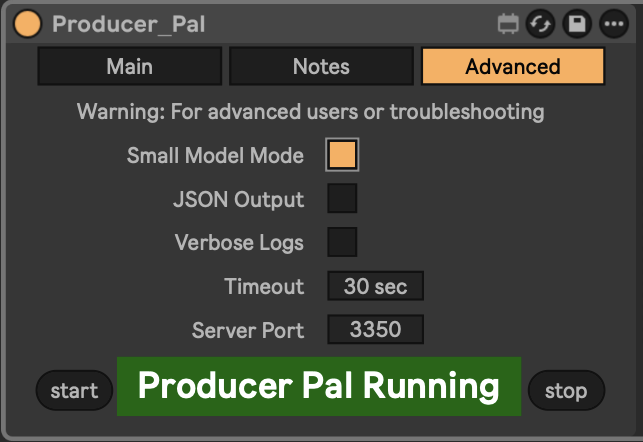

4. Enable Small Model Mode (Optional but Recommended)

In the Producer Pal "Setup" tab, enable Small Model Mode.

This provides a smaller, simpler interface optimized for small/local language models.

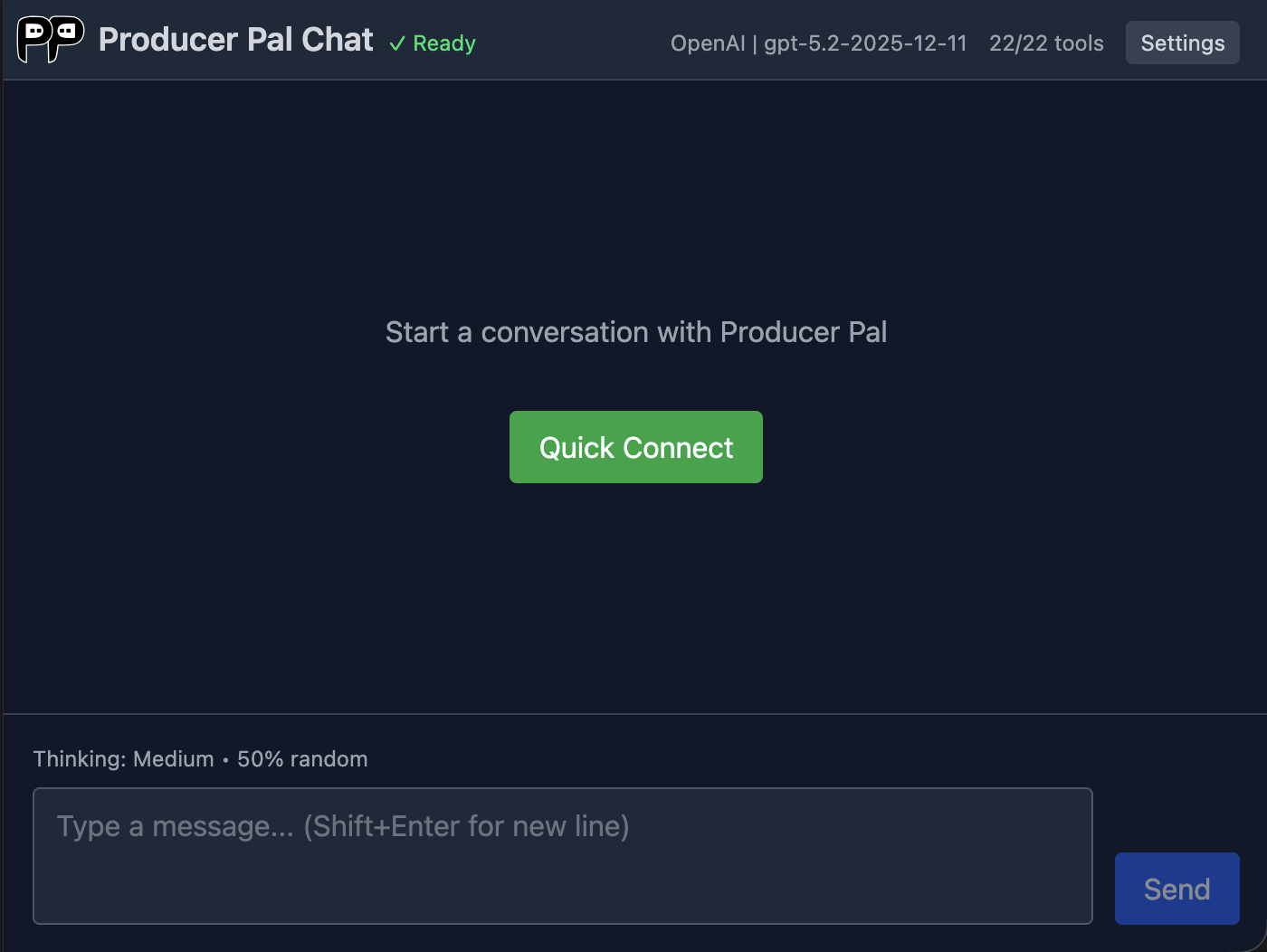

5. Open the Chat UI

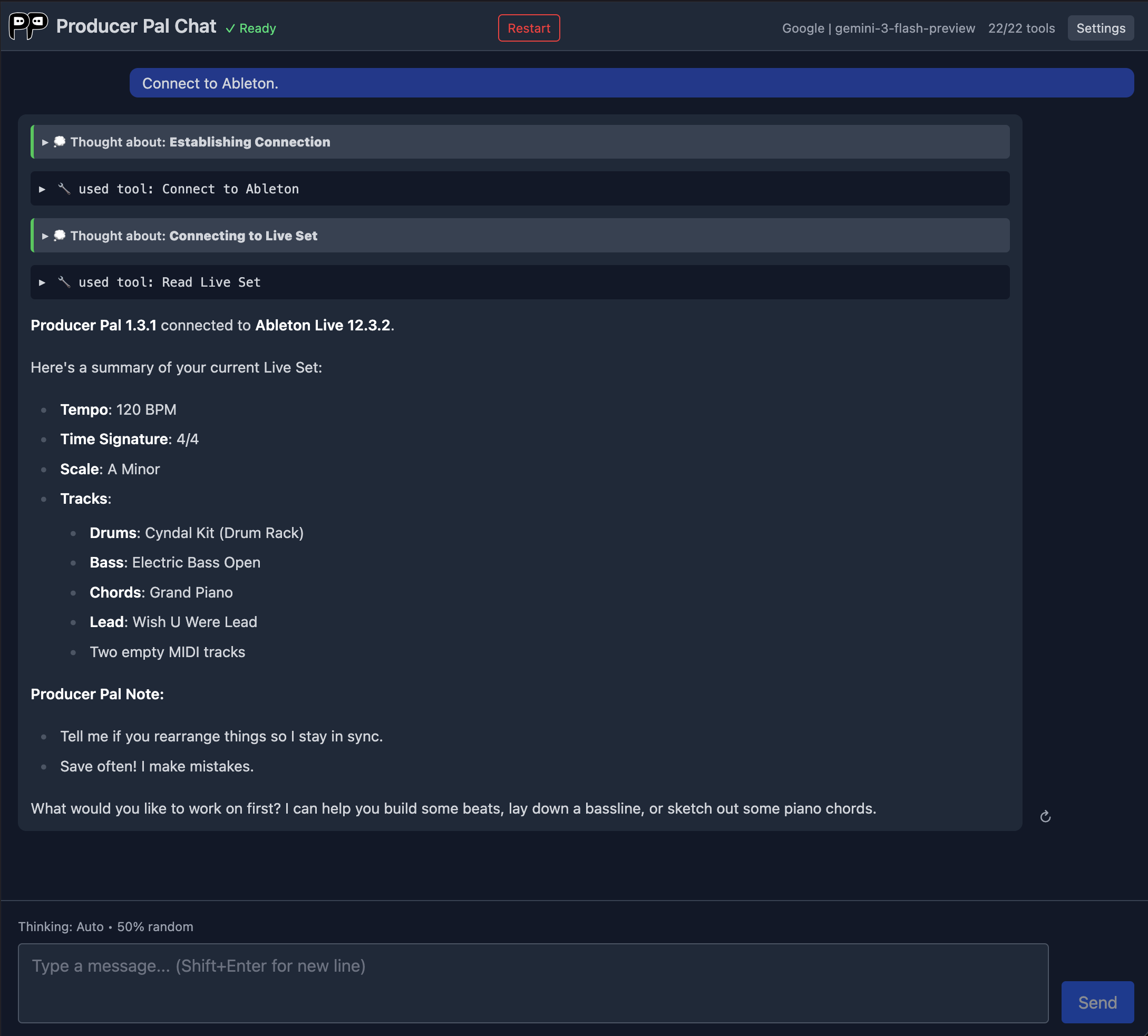

In the Producer Pal device's Main tab, click "Open Chat UI". The built-in chat UI opens in a browser:

6. Configure Ollama

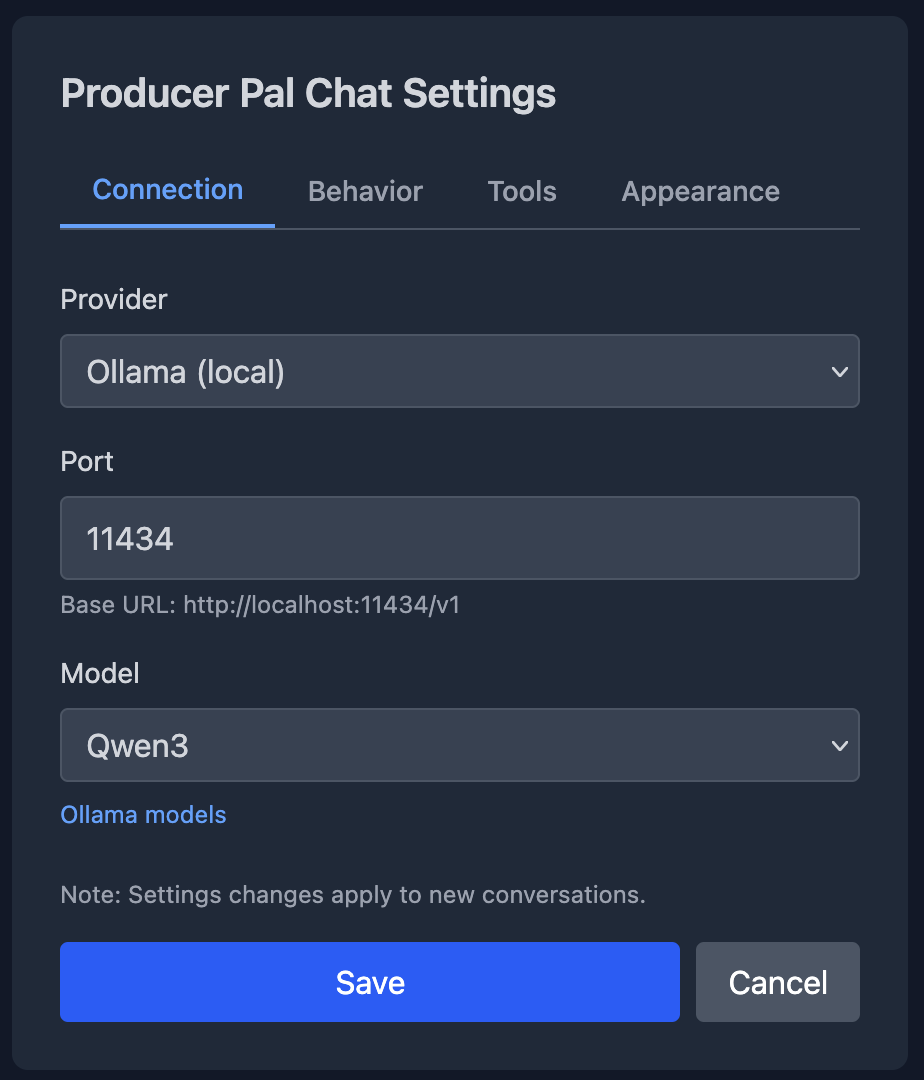

In the chat UI settings:

- Provider: Ollama (local)

- Port: 11434 (default)

- Model: Your model name (e.g., qwen3 or qwen3:8b)

Click "Save".
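Before saving, it can help to confirm that Ollama is actually reachable on the configured port and to see the exact model names it reports. The sketch below is illustrative only and is not how Producer Pal connects. It uses Ollama's `/api/tags` endpoint, which lists locally installed models; the function names are hypothetical.

```python
import json
import urllib.error
import urllib.request


def tags_url(port: int = 11434, host: str = "localhost") -> str:
    """Build the URL of Ollama's "list local models" endpoint."""
    return f"http://{host}:{port}/api/tags"


def model_names(tags_response: dict) -> list[str]:
    """Extract the model names from a parsed /api/tags response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_installed_models(port: int = 11434) -> list[str]:
    """Query the local Ollama server and return its installed model names.

    Raises RuntimeError if nothing answers on the given port.
    """
    try:
        with urllib.request.urlopen(tags_url(port), timeout=5) as response:
            return model_names(json.load(response))
    except (urllib.error.URLError, OSError) as error:
        raise RuntimeError(
            f"Ollama is not reachable on port {port}: {error}"
        ) from error
```

Calling `list_installed_models()` with Ollama running returns names such as `qwen3:8b`. Entering one of those names exactly as listed in the Model field avoids most "model not installed" errors.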

Ollama Model Aliases

If Producer Pal says a model like qwen3 is not installed even though you downloaded qwen3:8b, the cause is that Ollama aliases work in one direction only: qwen3 points to qwen3:8b, but qwen3:8b does not point back to qwen3. Pull qwen3 in Ollama as well (for example with ollama pull qwen3) to create the alias. It won't re-download the model.
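The one-way behavior follows from how names are matched. Ollama treats a name without a tag as `name:latest`, so a lookup for qwen3 only succeeds if a `qwen3:latest` entry exists locally. The sketch below models that with a plain exact-match check. It is an assumption about the lookup for illustration, not Producer Pal's or Ollama's actual code, and it ignores names that include a registry host and port.

```python
def normalize(name: str) -> str:
    """Append the implicit ":latest" tag when a model name has no tag."""
    return name if ":" in name else f"{name}:latest"


def is_installed(requested: str, installed: list[str]) -> bool:
    """Exact-match lookup on normalized names (hypothetical helper)."""
    wanted = normalize(requested)
    return any(normalize(item) == wanted for item in installed)


# Only qwen3:8b was pulled, so the untagged alias is missing.
print(is_installed("qwen3", ["qwen3:8b"]))
# After pulling qwen3 as well, both names resolve.
print(is_installed("qwen3", ["qwen3:8b", "qwen3:latest"]))
```

The first call prints `False` and the second prints `True`, which mirrors the error described above and its fix.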

7. Connect

Click "Quick Connect" and say "connect to ableton".

Local Model Limitations

Local models work best for simple tasks. Complex edits may require more capable cloud models.

Model Compatibility

If the model responds with garbled text like <|tool_call_start|>... or says it can't connect to Ableton, the model doesn't support tools. Try a different model from the tools category.

Troubleshooting

If it doesn't work, see the Troubleshooting Guide.