Other Providers

The built-in chat UI supports the OpenAI API, Mistral, OpenRouter, LM Studio, and custom OpenAI-compatible providers.

Pay-as-you-go Pricing

Most of these options (all except LM Studio) use pay-as-you-go pricing, which can incur costs quickly with advanced models and long conversations. Monitor your API key usage.
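Long conversations get expensive because chat completions APIs are stateless: every new message resends the entire conversation so far as input tokens, so the input billed per turn keeps growing. The rough sketch below illustrates that effect. The token counts and per-million-token prices are made-up placeholders, not real rates for any provider or model, and the sketch ignores anything else sent with each request (system prompts, tool definitions, tool results), which only adds to the input.

```python
def estimate_conversation_cost(
    turns: int,
    tokens_per_user_msg: int,
    tokens_per_reply: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Rough cost of a chat where every request resends the full history.

    All arguments are hypothetical placeholders: real token counts vary per
    message, and real prices vary per provider and model.
    """
    total_input = 0
    total_output = 0
    history = 0  # tokens already in the conversation before this turn
    for _ in range(turns):
        history += tokens_per_user_msg
        total_input += history  # the whole history is sent as input
        total_output += tokens_per_reply
        history += tokens_per_reply  # the reply becomes part of the history
    return (
        total_input / 1_000_000 * input_price_per_million
        + total_output / 1_000_000 * output_price_per_million
    )


# Placeholder numbers: 200-token messages, 500-token replies,
# $3 per million input tokens, $15 per million output tokens.
short_chat = estimate_conversation_cost(10, 200, 500, 3.0, 15.0)
long_chat = estimate_conversation_cost(20, 200, 500, 3.0, 15.0)
```

With these placeholder numbers, going from 10 to 20 turns more than triples the estimate, because total input grows roughly with the square of the conversation length. Starting a fresh conversation is the simplest way to reset that growth.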

Setup Steps

- Download Producer_Pal.amxd and drag it to a MIDI track in Ableton Live

- In the Producer Pal device, click "Open Chat UI"

- Configure your provider as described below

- Click "Quick Connect" and say "connect to ableton"

Available Providers

OpenRouter

OpenRouter is an "AI gateway" that offers hundreds of LLMs in one place, with both free and pay-as-you-go options.

- Get an OpenRouter API key

- In the chat UI settings:

  - Provider: OpenRouter

  - API Key: Your key

  - Model: e.g., anthropic/claude-sonnet-4, google/gemini-2.5-pro

Mistral

Mistral offers AI models developed in France. A free tier is available, though its usage limits are fairly strict.

- Get a Mistral API key

- In the chat UI settings:

  - Provider: Mistral

  - API Key: Your key

  - Model: e.g., mistral-large-latest

OpenAI API

OpenAI offers GPT models with pay-as-you-go pricing.

- Get an OpenAI API key

- In the chat UI settings:

  - Provider: OpenAI

  - API Key: Your key

  - Model: e.g., gpt-4o, gpt-4.1

Subscription Alternative

Prefer flat-rate pricing? Codex CLI works with OpenAI's subscription plans.

Custom Providers

For other OpenAI-compatible providers:

- In the chat UI settings:

  - Provider: Custom (OpenAI-compatible)

  - API Key: Your provider's key

  - Base URL: Your provider's API endpoint

  - Model: The model name

Example: Groq

- Provider: Custom (OpenAI-compatible)

- API Key: Your Groq API key

- Base URL: https://api.groq.com/openai/v1

- Model: llama-3.3-70b-versatile
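"OpenAI-compatible" generally means the provider accepts OpenAI's chat completions format: a POST to the base URL plus /chat/completions, a Bearer token in the Authorization header, and a JSON body naming the model and the messages. As a rough sketch under those standard conventions, the Python below builds (but does not send) such a request with the Groq settings. It illustrates the protocol only and is not Producer Pal's own code; build_chat_request and "YOUR_GROQ_API_KEY" are hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    # OpenAI-compatible servers expose chat at <base URL>/chat/completions.
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(
    "https://api.groq.com/openai/v1",
    "YOUR_GROQ_API_KEY",  # placeholder, not a real key
    "llama-3.3-70b-versatile",
    "Hello",
)
# urllib.request.urlopen(req) would actually send it.
```

This is why the Base URL stops at .../v1: the /chat/completions path is appended to it, so a Base URL that already ends in /chat/completions would produce a doubled path.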

Privacy Note

Your API key is stored in browser local storage. Use a private browser session if that concerns you, or delete the key from settings after use.

LM Studio

For free locally running models, you can use LM Studio with the built-in chat UI instead of LM Studio's native UI.

Install LM Studio and download a model that supports tools

Go to the LM Studio developer tab

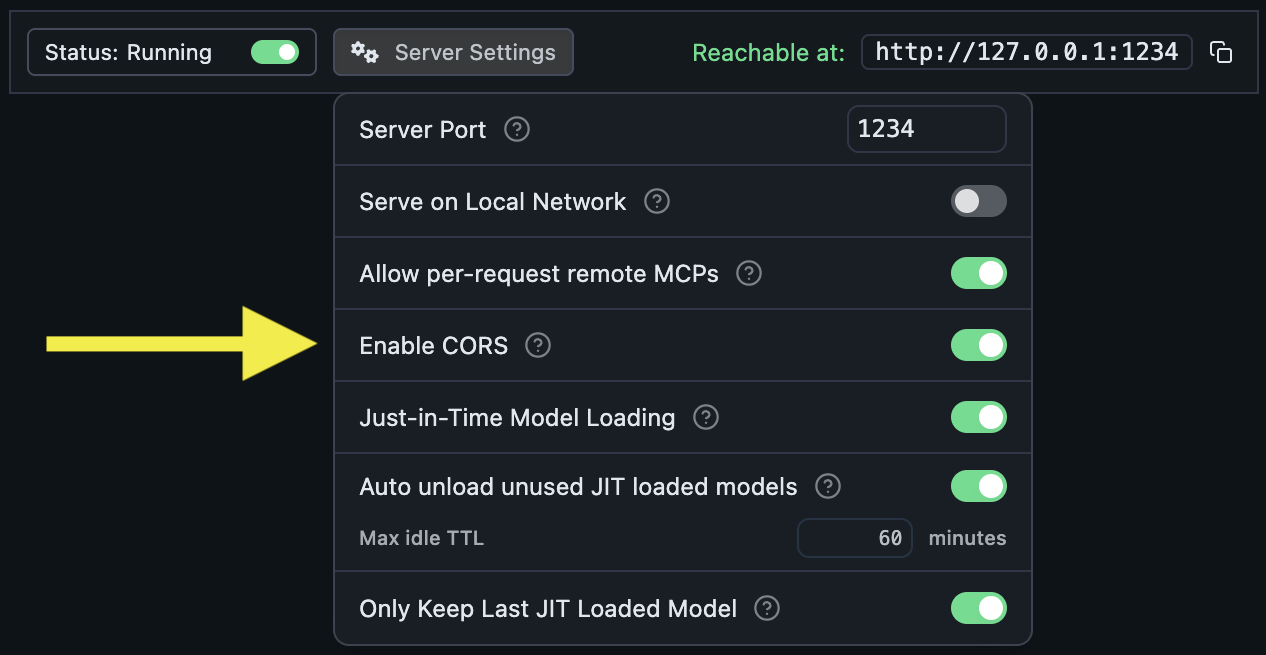

Open Server Settings and Enable CORS (required for browser access):

Start the LM Studio server (should say "Status: Running")

In the Producer Pal Chat UI settings:

- Provider: LM Studio (local)

- Port:

1234(default) - Model: A model that supports tools, such as

qwen/qwen3-vl-8b,openai/gpt-oss-20b, ormistralai/magistral-small-2509

Save and click "Quick Connect"
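LM Studio's server exposes an OpenAI-compatible API on localhost, under /v1 at the configured port. The sketch below, assuming the default port 1234 and the standard OpenAI-style GET /v1/models endpoint, is one quick way to confirm the server is running and see which model IDs it reports. The function names are hypothetical helpers, not part of LM Studio or Producer Pal.

```python
from __future__ import annotations

import json
import urllib.request


def lmstudio_base_url(port: int = 1234) -> str:
    """OpenAI-compatible base URL of a local LM Studio server."""
    return f"http://localhost:{port}/v1"


def parse_model_ids(models_json: str) -> list[str]:
    """Extract model IDs from an OpenAI-style GET /models response body."""
    return [m["id"] for m in json.loads(models_json).get("data", [])]


def list_local_models(port: int = 1234, timeout: float = 2.0) -> list[str] | None:
    """Return the model IDs the server reports, or None if it is unreachable."""
    try:
        url = lmstudio_base_url(port) + "/models"
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return parse_model_ids(resp.read().decode("utf-8"))
    except OSError:
        # URLError, refused connections, and timeouts are all OSError subclasses.
        return None
```

A script like this is not subject to CORS (browsers enforce it, Python does not), so it can succeed even when the browser-based chat UI is blocked. If the script lists models but Quick Connect fails, check the CORS setting first.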

Model Tool Support

If the model responds with garbled text like <|tool_call_start|>... or says it can't connect to Ableton, the model doesn't support tools. In LM Studio, models that support tools are marked with a hammer icon next to their name.
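With tool support, an OpenAI-compatible server returns tool invocations in a structured tool_calls field on the reply message. A model without tool support may instead leak its raw tool-call tokens into the plain-text content, which produces the garbled output described above. The heuristic sketch below distinguishes the two cases in a chat completions response. The marker strings are examples only (the first comes from this page, the second is an assumption), and real models use other formats too.

```python
# Example markers only: formats differ between models, so this is not exhaustive.
LEAKED_TOOL_MARKERS = ("<|tool_call", "<tool_call>")


def diagnose_reply(response: dict) -> str:
    """Classify an OpenAI-style chat completions response (heuristic)."""
    message = response["choices"][0]["message"]
    if message.get("tool_calls"):
        # Structured tool calls: the model and server handle tools properly.
        return "tool_call"
    content = message.get("content") or ""
    if any(marker in content for marker in LEAKED_TOOL_MARKERS):
        # Raw tool-call tokens in the text: the model likely lacks tool support.
        return "leaked_tool_tokens"
    return "plain_text"
```

The other symptom, a reply claiming it can't connect to Ableton, arrives as ordinary text, so a check like this classifies it as plain_text and can't catch it.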

Small Model Mode

Enable "Small Model Mode" in the Producer Pal Setup tab for better compatibility with local models. See LM Studio tips for more optimization advice.

Troubleshooting

If the built-in chat doesn't work, see the Troubleshooting Guide.